At Kalido, our mission is to connect people with economic opportunities. Many of our features that achieve this are powered and enhanced using clever AI/machine learning algorithms. One of which is our skill matching technology. The skill matcher is sort of like a search engine for finding people based on skills. We allow people to describe the skills they have and the skills they need in whichever way they want and we use AI/machine learning to do the heavy lifting.

I am Bati, a senior data scientist working at Kalido and in this blog post I will try to explain why we use machine learning on this problem, how it works, and showcase some of its true power.

A programmer’s worst nightmare

Imagine for a minute that you are a software programmer and you are tasked with matching people. You have a bunch of people searching for certain terms on the one hand and a bunch of people offering their skills on the other. Your first idea might be to get people to select from a list of approved searches/skills.

When people search for a skill, you just need to see who has listed the same skill on their profile. Simple!

Then comes the real world destroying all your plans. People don’t tend to do well with this restricted sort of searching. For example, a lot of people don’t even know what android sdk is and just want to find someone to develop a mobile app. Some people might call it an app developer, others might say phone app developer or even android programmer.

So now you implement a free text field, users can enter their searches and skills by typing in whatever they want. You add some rules to do the matching, starting with the most obvious: if the search is the same as the skill, you say it’s a match. Next, you can maybe try to get rid of the problem of programmer vs developer by just replacing developer with programmer. You need even more variations on this: programming, development etc.

Once again, the real world strikes back. There is a difference between how technical people describe their skills vs how non-technical people might look for those skills. Say you want someone to build you a website, you might type in “creating websites” or even “website developer” to the search. Most people who might have that skill will likely list it in the form of other technical skills like: angular js, html, css etc. So now you add more rules and more rules as you discover these.

There are more rules to come: similar skills are often described in different ways: java developer, java programmer, full-stack java, java SE 6. So you add substring searching: look for java in the skill if it’s present in the search. After all this, you notice some odd results like a search for java developer matching java coffee beans. So you add more rules to prevent these.

Congratulations, you have made it to programmers’ hell. You will end up with a file with thousands and thousands of rules, which nobody will really know exactly what they are, two hundred thousand lines of code most of which is filled with if statements and a constant source of new things you didn’t initially capture coming up.

Enter machine learning

All that effort in building a rule-based system won’t even net you a good system. In our experience, machine learning models far outperform the rules-based ones. The reason for that is rule-based methods work well on the data that is seen, but there is always oversight on unseen/new data.

The way ML models work in this problem is in two steps:

- Teach the ML model about context. It needs to know that angular js, html, css are all website development tools.

- Use examples you find in the dataset to “fine-tune” the model from step 1) and evaluate its performance.

At a very high level, machine learning models learn by ingesting data. You give it some input data and a target that you want it to predict and from examples where you give it all the data, it trains itself to predict that target. When you want to use the model, you only need to give it the input data and it will spit out target predictions.

For 1) the ML model learns about context by training on a very large database of Wikipedia entries. Going across each sentence in each wiki article, you can leave out some of the words and get the model to try and predict the missing words. This makes the model learn about context. For example, the Wikipedia article about AngularJS starts with:

AngularJS is a JavaScript-based open-source front-end web framework mainly maintained by Google and by a community of individuals and corporations to address many of the challenges encountered in developing single-page applications.

So to fill out the missing words, the machine learning model will figure out that JavaScript and AngularJS are related (as they will be mentioned in the same sentence quite a lot during the article). For step 2), we curate our own data. Basically, we take a whole bunch of examples of searches and skills and get humans to label them a match or not match. We refer to this process as mechanical Ash, because of Mechanical Turk and that one of our co-founders Ashvin labelled a lot of data for us.

The magic of machine learning

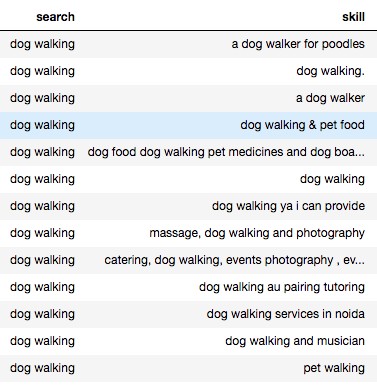

The reason that ML is so hyped at the moment is because it really is amazing. Here are some examples from our own database. Below I put some of the examples for searching for a “dog walker” and we got:

You can see the model captures all the little variations very well.

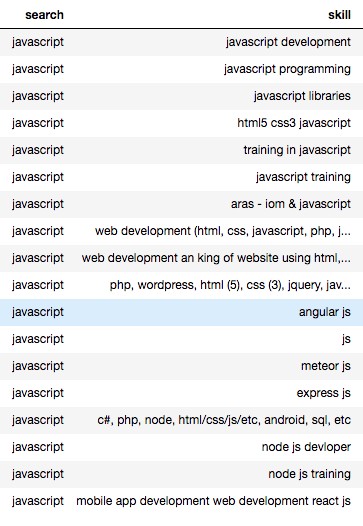

Here is a more technical example. The search term here is “JavaScript” which is a programming language used in designing interactive websites. For this example, the model picks up the abbreviation “js” which is often used, as well as libraries in JavaScript such as AngularJS and ReactJS.

Amazingly, this was done without us explicitly having to tell the model that JavaScript = js or that AngularJS is a particular JavaScript library, it already knew that! Awesome stuff.

There is a huge variety of machine learning models that operate on text data and none of the models are perfect. At Kalido, we strive to improve our models as much as we can. This is a very complex and hard problem in which we are constantly working our magic to advance. Improving our models and our feature set, we are able to deliver more economic opportunities to users, communities, and enterprises. Our machine learning based approach has been key to our success in doing so.